Albergo, Michael S., and Eric Vanden-Eijnden. 2023.

“Building Normalizing Flows with Stochastic Interpolants.” https://arxiv.org/abs/2209.15571.

Chefer, Hila, Uriel Singer, Amit Zohar, Yuval Kirstain, Adam Polyak, Yaniv Taigman, Lior Wolf, and Shelly Sheynin. 2025.

“VideoJAM: Joint Appearance-Motion Representations for Enhanced Motion Generation in Video Models.” https://arxiv.org/abs/2502.02492.

Chen, Chen, Rui Qian, Wenze Hu, Tsu-Jui Fu, Jialing Tong, Xinze Wang, Lezhi Li, et al. 2025.

“DiT-Air: Revisiting the Efficiency of Diffusion Model Architecture Design in Text to Image Generation.” https://arxiv.org/abs/2503.10618.

Dai, Bin, and David Wipf. 2019.

“Diagnosing and Enhancing VAE Models.” https://arxiv.org/abs/1903.05789.

Dhariwal, Prafulla, and Alex Nichol. 2021.

“Diffusion Models Beat GANs on Image Synthesis.” https://arxiv.org/abs/2105.05233.

Dieleman, Sander. 2024.

“Diffusion Is Spectral Autoregression.” https://sander.ai/2024/09/02/spectral-autoregression.html.

———. 2025.

“Generative Modelling in Latent Space.” https://sander.ai/2025/04/15/latents.html.

Esser, Patrick, Sumith Kulal, Andreas Blattmann, Rahim Entezari, Jonas Müller, Harry Saini, Yam Levi, et al. 2024.

“Scaling Rectified Flow Transformers for High-Resolution Image Synthesis.” https://arxiv.org/abs/2403.03206.

Falck, Fabian, Teodora Pandeva, Kiarash Zahirnia, Rachel Lawrence, Richard Turner, Edward Meeds, Javier Zazo, and Sushrut Karmalkar. 2025.

“A Fourier Space Perspective on Diffusion Models.” https://arxiv.org/abs/2505.11278.

Gerdes, Mathis, Max Welling, and Miranda C. N. Cheng. 2024.

“GUD: Generation with Unified Diffusion.” https://arxiv.org/abs/2410.02667.

Goodfellow, Ian J., Jean Pouget-Abadie, Mehdi Mirza, Bing Xu, David Warde-Farley, Sherjil Ozair, Aaron Courville, and Yoshua Bengio. 2014.

“Generative Adversarial Networks.” https://arxiv.org/abs/1406.2661.

Gui, Ming, Johannes Schusterbauer, Timy Phan, Felix Krause, Josh Susskind, Miguel Angel Bautista, and Björn Ommer. 2025.

“Adapting Self-Supervised Representations as a Latent Space for Efficient Generation.” https://arxiv.org/abs/2510.14630.

Heusel, Martin, Hubert Ramsauer, Thomas Unterthiner, Bernhard Nessler, and Sepp Hochreiter. 2018.

“GANs Trained by a Two Time-Scale Update Rule Converge to a Local Nash Equilibrium.” https://arxiv.org/abs/1706.08500.

Ho, Jonathan, Ajay Jain, and Pieter Abbeel. 2020.

“Denoising Diffusion Probabilistic Models.” https://arxiv.org/abs/2006.11239.

Hoogeboom, Emiel, Jonathan Heek, and Tim Salimans. 2023.

“Simple Diffusion: End-to-End Diffusion for High Resolution Images.” https://arxiv.org/abs/2301.11093.

Johnson, Justin, Alexandre Alahi, and Li Fei-Fei. 2016.

“Perceptual Losses for Real-Time Style Transfer and Super-Resolution.” https://arxiv.org/abs/1603.08155.

Karras, Tero, Miika Aittala, Timo Aila, and Samuli Laine. 2022.

“Elucidating the Design Space of Diffusion-Based Generative Models.” https://arxiv.org/abs/2206.00364.

Kingma, Diederik P., and Ruiqi Gao. 2023.

“Understanding Diffusion Objectives as the ELBO with Simple Data Augmentation.” https://arxiv.org/abs/2303.00848.

Kingma, Diederik P., and Max Welling. 2022.

“Auto-Encoding Variational Bayes.” https://arxiv.org/abs/1312.6114.

Kouzelis, Theodoros, Ioannis Kakogeorgiou, Spyros Gidaris, and Nikos Komodakis. 2025.

“EQ-VAE: Equivariance Regularized Latent Space for Improved Generative Image Modeling.” https://arxiv.org/abs/2502.09509.

Kouzelis, Theodoros, Efstathios Karypidis, Ioannis Kakogeorgiou, Spyros Gidaris, and Nikos Komodakis. 2025.

“Boosting Generative Image Modeling via Joint Image-Feature Synthesis.” https://arxiv.org/abs/2504.16064.

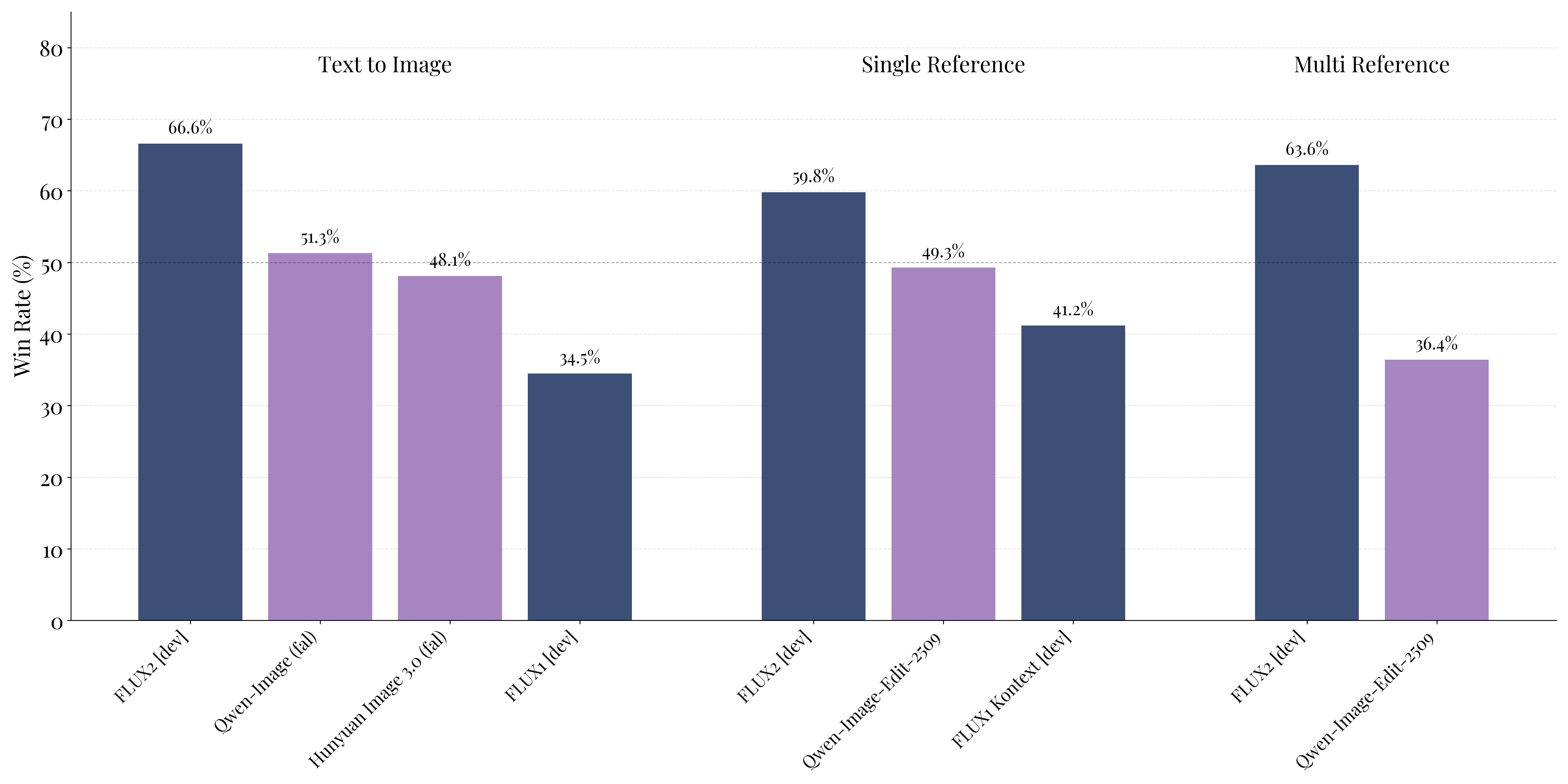

Black Forest Labs, Stephen Batifol, Andreas Blattmann, Frederic Boesel, Saksham Consul, Cyril Diagne, Tim Dockhorn, et al. 2025.

“FLUX.1 Kontext: Flow Matching for In-Context Image Generation and Editing in Latent Space.” https://arxiv.org/abs/2506.15742.

Leng, Xingjian, Jaskirat Singh, Yunzhong Hou, Zhenchang Xing, Saining Xie, and Liang Zheng. 2025.

“REPA-E: Unlocking VAE for End-to-End Tuning with Latent Diffusion Transformers.” https://arxiv.org/abs/2504.10483.

Lipman, Yaron, Ricky T. Q. Chen, Heli Ben-Hamu, Maximilian Nickel, and Matt Le. 2023.

“Flow Matching for Generative Modeling.” https://arxiv.org/abs/2210.02747.

Liu, Xingchao, Chengyue Gong, and Qiang Liu. 2022.

“Flow Straight and Fast: Learning to Generate and Transfer Data with Rectified Flow.” https://arxiv.org/abs/2209.03003.

Ma, Nanye, Mark Goldstein, Michael S. Albergo, Nicholas M. Boffi, Eric Vanden-Eijnden, and Saining Xie. 2024.

“SiT: Exploring Flow and Diffusion-Based Generative Models with Scalable Interpolant Transformers.” https://arxiv.org/abs/2401.08740.

Oquab, Maxime, Timothée Darcet, Théo Moutakanni, Huy Vo, Marc Szafraniec, Vasil Khalidov, Pierre Fernandez, et al. 2023.

“DINOv2: Learning Robust Visual Features Without Supervision.” https://arxiv.org/abs/2304.07193.

Rezende, Danilo Jimenez, Shakir Mohamed, and Daan Wierstra. 2014.

“Stochastic Backpropagation and Approximate Inference in Deep Generative Models.” https://arxiv.org/abs/1401.4082.

Rombach, Robin, Andreas Blattmann, Dominik Lorenz, Patrick Esser, and Björn Ommer. 2022.

“High-Resolution Image Synthesis with Latent Diffusion Models.” https://arxiv.org/abs/2112.10752.

Russakovsky, Olga, Jia Deng, Hao Su, Jonathan Krause, Sanjeev Satheesh, Sean Ma, Zhiheng Huang, et al. 2014.

“ImageNet Large Scale Visual Recognition Challenge.”

Skorokhodov, Ivan, Sharath Girish, Benran Hu, Willi Menapace, Yanyu Li, Rameen Abdal, Sergey Tulyakov, and Aliaksandr Siarohin. 2025.

“Improving the Diffusability of Autoencoders.” https://arxiv.org/abs/2502.14831.

Sohl-Dickstein, Jascha, Eric A. Weiss, Niru Maheswaranathan, and Surya Ganguli. 2015.

“Deep Unsupervised Learning Using Nonequilibrium Thermodynamics.” https://arxiv.org/abs/1503.03585.

Song, Yang, and Stefano Ermon. 2020.

“Generative Modeling by Estimating Gradients of the Data Distribution.” https://arxiv.org/abs/1907.05600.

Wang, Zhou, Alan Conrad Bovik, Hamid R. Sheikh, and Eero P. Simoncelli. 2004.

“Image Quality Assessment: From Error Visibility to Structural Similarity.” IEEE Transactions on Image Processing 13: 600–612. https://api.semanticscholar.org/CorpusID:207761262.

Wu, Ge, Shen Zhang, Ruijing Shi, Shanghua Gao, Zhenyuan Chen, Lei Wang, Zhaowei Chen, et al. 2025.

“Representation Entanglement for Generation: Training Diffusion Transformers Is Much Easier Than You Think.” https://arxiv.org/abs/2507.01467.

Yao, Jingfeng, Bin Yang, and Xinggang Wang. 2025.

“Reconstruction vs. Generation: Taming Optimization Dilemma in Latent Diffusion Models.” https://arxiv.org/abs/2501.01423.

Yu, Sihyun, Sangkyung Kwak, Huiwon Jang, Jongheon Jeong, Jonathan Huang, Jinwoo Shin, and Saining Xie. 2025.

“Representation Alignment for Generation: Training Diffusion Transformers Is Easier Than You Think.” https://arxiv.org/abs/2410.06940.

Zeng, Weili, and Yichao Yan. 2025.

“Flow Matching in the Low-Noise Regime: Pathologies and a Contrastive Remedy.” https://arxiv.org/abs/2509.20952.

Zhang, Richard, Phillip Isola, Alexei A. Efros, Eli Shechtman, and Oliver Wang. 2018.

“The Unreasonable Effectiveness of Deep Features as a Perceptual Metric.” https://arxiv.org/abs/1801.03924.

Zhou, Yifan, Zeqi Xiao, Shuai Yang, and Xingang Pan. 2025.

“Alias-Free Latent Diffusion Models: Improving Fractional Shift Equivariance of Diffusion Latent Space.” https://arxiv.org/abs/2503.09419.